Script to log OverSight camera/mic events on macOS

I’m a huge fan of Objective-See, Patrick Wardle’s non-profit organization, and the arsenal of invaluable macOS security tools he provides.

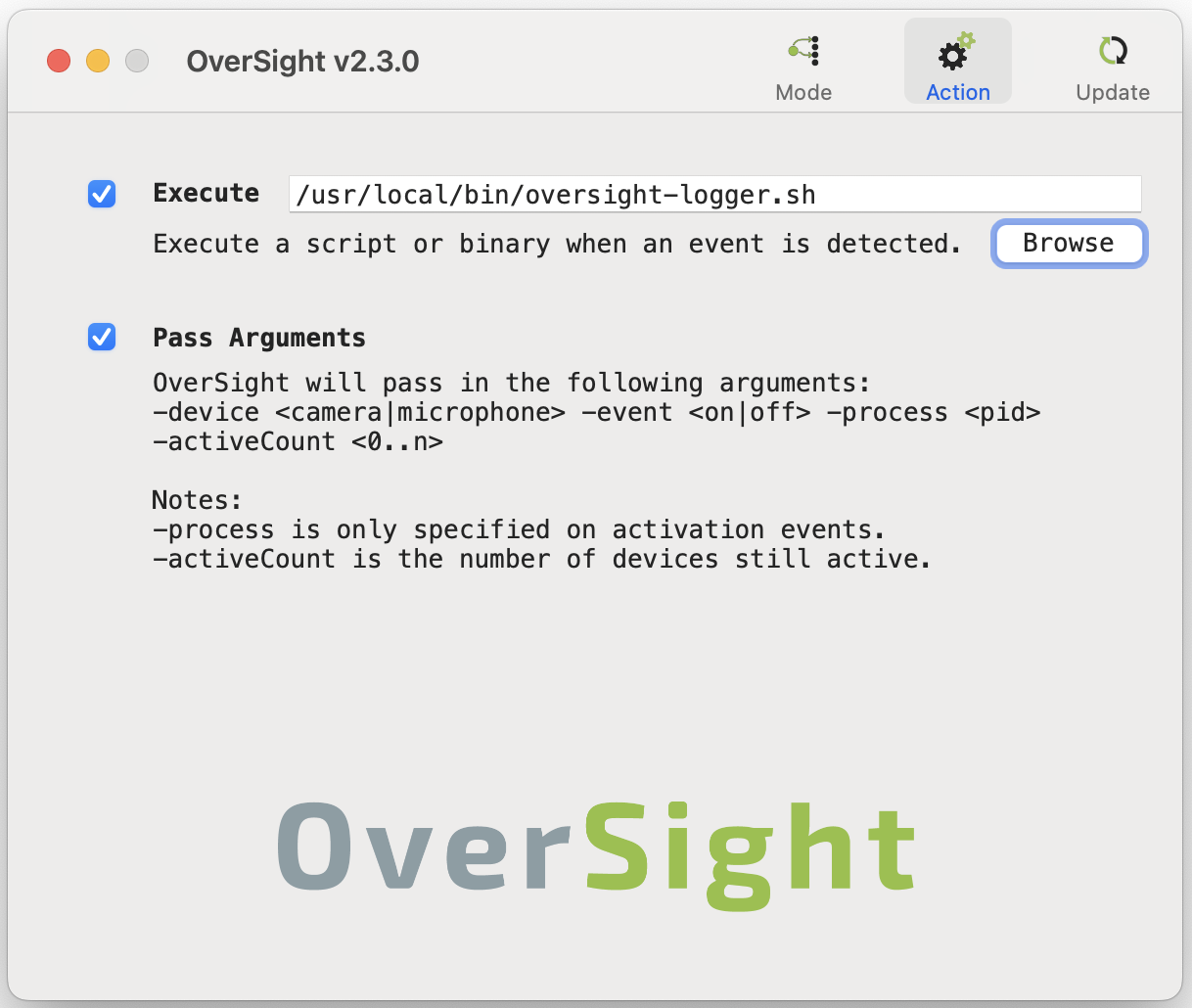

I use Patrick’s OverSight product to monitor camera and microphone activity. By default, OverSight uses notifications to alert you to camera or microphone activity (e.g., when an application activates these devices). In addition to these notifications, I also want to write these events to a log.

OverSight doesn’t have a built-in logging feature, but it does allow you to execute a script when an event is triggered. The Pass Arguments option conveniently passes useful inputs to your script.
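As a rough sketch of what a script can do with those inputs: assuming they arrive as flag/value pairs such as `-device camera -event on -process 99681 -activeCount 2` (the shape visible in the log entries further down), a small helper can pull out a single value. The name `get_arg` is hypothetical and not part of OverSight or oversight-logger.

```shell
#!/bin/sh
# Hypothetical helper (an illustration, not OverSight's API): print the value
# that follows a given flag in an argument list. Prints nothing and returns 1
# if the flag is absent or has no value after it.
get_arg() {
    flag="$1"
    shift
    while [ "$#" -gt 1 ]; do
        if [ "$1" = "$flag" ]; then
            printf '%s\n' "$2"
            return 0
        fi
        shift
    done
    return 1
}

# Extract the PID from the script's own arguments. "off" events in the sample
# log carry no -process flag, so the result may be empty.
pid="$(get_arg -process "$@" || true)"
```

Scanning for a named flag rather than relying on fixed positions keeps the script working when an argument is missing, as with the `off` events that omit `-process`.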

oversight-logger is a simple shell script that uses the provided inputs to write sensible log entries when camera or microphone events take place. The only substantive functionality is a pair of functions that look up the process path and process username based on the PID. The resulting log entries will look like this:

```text
2024-12-08 13:45:03 /Applications/zoom.us.app/Contents/MacOS/zoom.us username -device microphone -event on -process 99681 -activeCount 1
2024-12-08 13:45:08 /Applications/zoom.us.app/Contents/MacOS/zoom.us username -device camera -event on -process 99681 -activeCount 2
2024-12-08 13:45:12 NULL NULL -device microphone -event off -activeCount 1
2024-12-08 13:45:12 NULL NULL -device camera -event off -activeCount 0
```
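The two PID lookups could be sketched roughly as follows. This is not the actual oversight-logger source: the function names are hypothetical, and it assumes `ps -o comm=` prints the full executable path, which is typically the case on macOS. When there is no PID, or the process has already exited, the sketch falls back to `NULL`, matching the `off` entries above.

```shell
#!/bin/sh
# Illustrative sketch; function names are hypothetical, not oversight-logger's.

# Trim leading and trailing whitespace from stdin.
trim() {
    sed 's/^[[:space:]]*//; s/[[:space:]]*$//'
}

# Print one ps(1) field for a PID, or NULL when the PID is empty
# or no longer refers to a running process.
lookup() {
    pid="$1"
    field="$2"
    value=""
    if [ -n "$pid" ]; then
        value="$(ps -p "$pid" -o "$field=" 2>/dev/null | trim)"
    fi
    printf '%s\n' "${value:-NULL}"
}

# Assumption: on macOS, the comm field holds the full executable path.
process_path() { lookup "$1" comm; }
process_user() { lookup "$1" user; }

# Build one log line: timestamp, path, user, then the original arguments.
log_entry() {
    pid="$1"
    shift
    printf '%s %s %s %s\n' "$(date '+%Y-%m-%d %H:%M:%S')" \
        "$(process_path "$pid")" "$(process_user "$pid")" "$*"
}
```

A script invoked by OverSight could then append an entry with something like `log_entry "$pid" "$@" >> "$HOME/oversight.log"`, where `$pid` is the value following `-process` (if any) and the log location is an assumption chosen for illustration.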

A napkin drawing.
